REIMAGINE LIVE PRODUCTION

WITH PIXOTOPE

Unleash the power of real-time virtual sets and augmented reality.

Our virtual production solution, Pixotope Graphics VS/AR Edition, transforms your broadcasts into visually stunning experiences.

Whether you're producing live news, sports, or entertainment, Pixotope helps you stand out.

Pixotope's engine elevates your visuals with realistic lighting, shadows, and reflections.

Seamlessly integrate virtual and tracked cameras, leveraging Unreal Engine's full animation toolset.

Enhance your scenes with translucent objects like virtual glass and atmospheric elements like fog and water.

No more struggling with multiple panels or complex setups.

With Pixotope, you can control and manage every technical aspect of your production (cameras, signals, renders, chroma keying, and color correction) from a single, intuitive interface.

Time truly is money in the world of production. Our VS/AR Edition has one primary goal: to save you time. What sets it apart is how easy it is to use, even for complete beginners.

Spend less time on setup and optimization, and reach your results faster with a streamlined workflow.

Experience the power and flexibility of our comprehensive API, designed specifically for Unreal Engine.

Our API lets you integrate external data and automate tasks, so you can optimize your projects effortlessly.

With remote access to every aspect of the engine, you have the freedom to work from anywhere, at any time.

See real-time updates and modifications as you fine-tune every aspect of your content, eliminating guesswork and uncertainty.

What you see on the screen is precisely what your audience will see, guaranteeing a seamless and captivating end result.

Effortlessly transition between levels and scenes in real time, without interruptions or delays.

Pixotope reduces downtime between shows by enabling instant switching between sets, allowing you to quickly change the look and feel of each show.

All rendering and internal compositing happen inside Pixotope's customized version of the Unreal Engine render pipeline.

We have optimized the render pipeline to allow for very efficient single-pass rendering that does not compromise the quality of the video.

No more complex nodes, blueprints, or engine settings.

Control everything effortlessly from our user interface. Fine-tune composites, master color grading, and apply video effects with just a few clicks.

Create custom control panels in minutes, not hours. Our intuitive, drag-and-drop interface empowers you to design tailored control panels for mobile and desktop devices.

Seamlessly map your engine's elements, from scenes and blueprints to properties and functions, onto customizable buttons, sliders, and more.

WHAT OUR CUSTOMERS SAY

"This was our first experience utilizing all aspects of Pixotope for broadcasting delivery. Pixotope is very convenient, the producers don’t need to master too many additional skills and can instead focus on the creative, with the software accurately producing the effect that the designer wants. Any issues we encountered during production were solved by Pixotope’s engineers, with some even traveling to our location to ensure everything worked correctly and offer that next level of personalized service."

“We are extremely excited to add Pixotope to our creative services offering and look forward to pushing new boundaries with it. It’s really important for MOOV to continue investing in the latest and best technologies, ensuring our clients have the right solution for their projects. Pixotope is designed with creativity, speed, and flexibility in mind which in our ever-challenging industry is crucial.”

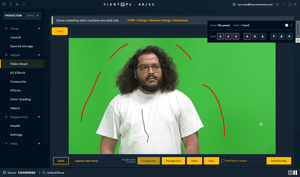

REAL-TIME CHANGES

IN WYSIWYG

With Pixotope, you have the power to make instant tweaks and adjustments to your Unreal scene.

Say goodbye to the headaches of fixing typos and mistakes through the tedious process of baking or packaging the Unreal project.

We prioritize giving operators and designers the confidence they need. That's why Pixotope offers WYSIWYG (What You See Is What You Get) – a one-of-a-kind in-software preview.

This preview includes incoming video feeds, tracking data, and compositing information, ensuring you get the most accurate representation of your production.

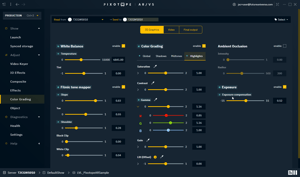

COLOR

CORRECTION

Goodbye, third-party tools – Pixotope puts creative control in your hands. Our robust color grading and image effects tools are seamlessly integrated into your video I/O pipeline, streamlining your workflow and saving you time.

Make precise adjustments that align with your creative and artistic vision, all within the Pixotope environment.

No more jumping between software – achieve your final desired results directly from the Pixotope video output. Experience the freedom to enhance your visuals and bring your creative ideas to life effortlessly with Pixotope.

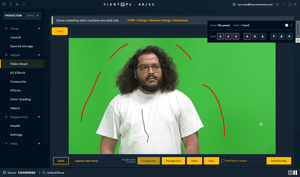

CHROMA KEYER

AND INTERNAL COMPOSITE

FLEXIBLE

PRODUCTION CONTROL

With Pixotope, you have the freedom to control your production in a variety of ways.

You can create multiple control panels to drive specific parts of your show or opt for a single, comprehensive panel that manages every aspect seamlessly.

We also offer integration with third-party devices such as Stream Deck and X-keys, enabling you to trigger specific events effortlessly.

And for those seeking the highest level of customization, Pixotope provides access to its API, allowing you to craft your own control system tailored to your creative vision.

ASSET

HUB

We understand the frustration of manually copying files when making project modifications across multiple machines.

With our automatic synchronization tool, your multi-machine setup always stays up to date.

Simply complete your work, hit 'push,' and watch as all your changes are automatically pulled onto the other machines.

It's a game-changer for streamlined workflow efficiency.
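The push/pull idea above can be illustrated with a generic manifest comparison. This is a minimal sketch, not Pixotope's implementation: the function names `build_manifest` and `plan_pull` are hypothetical, and it assumes each machine decides what to pull by comparing SHA-256 content hashes of its project files against a manifest published with the last push.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def build_manifest(root: Path) -> Dict[str, str]:
    """Hypothetical helper: map each file under root (relative POSIX path) to its SHA-256."""
    manifest: Dict[str, str] = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            rel = path.relative_to(root).as_posix()
            manifest[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return manifest


def plan_pull(local: Dict[str, str], pushed: Dict[str, str]) -> Dict[str, List[str]]:
    """Hypothetical pull plan: fetch new or changed files, remove files the push no longer has."""
    return {
        # A file is downloaded if it is missing locally or its hash differs.
        "download": sorted(p for p, digest in pushed.items() if local.get(p) != digest),
        # A local file absent from the pushed manifest was deleted upstream.
        "delete": sorted(p for p in local if p not in pushed),
    }
```

Comparing content hashes rather than timestamps means that a file copied or touched without being edited is not transferred again.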

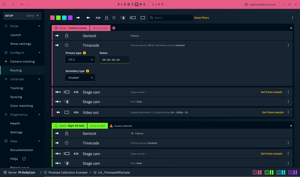

TIMECODE

TRIGGERING

Take control of precision timing with our powerful timecode-triggering feature.

Seamlessly sync your workflow to an external LTC (Linear Timecode) source or to timecode embedded in the video signal.
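Timecode triggering comes down to converting each incoming HH:MM:SS:FF timecode to a frame count and firing any cue whose start frame has been reached. The sketch below is a generic illustration, not the Pixotope API: `timecode_to_frames` and `TimecodeTrigger` are hypothetical names, and it assumes non-drop-frame timecode at an integer frame rate such as 25 or 50 fps (29.97 fps drop-frame counting is not handled).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert a non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not (0 <= hh < 24 and 0 <= mm < 60 and 0 <= ss < 60 and 0 <= ff < fps):
        raise ValueError(f"invalid timecode {tc!r} at {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


@dataclass
class TimecodeTrigger:
    """Hypothetical cue list: each cue fires once when incoming timecode reaches it."""
    fps: int
    cues: List[Tuple[int, str, Callable[[], None]]] = field(default_factory=list)

    def add_cue(self, tc: str, name: str, action: Callable[[], None]) -> None:
        self.cues.append((timecode_to_frames(tc, self.fps), name, action))
        # Keep cues ordered by start frame (sort on the frame only, never the callable).
        self.cues.sort(key=lambda cue: cue[0])

    def on_timecode(self, tc: str) -> List[str]:
        """Call once per frame with the timecode read from LTC or from the video."""
        now = timecode_to_frames(tc, self.fps)
        fired: List[str] = []
        while self.cues and self.cues[0][0] <= now:
            _, name, action = self.cues.pop(0)
            action()
            fired.append(name)
        return fired
```

Because `on_timecode` fires every cue at or before the current frame, a skipped timecode frame does not cause a cue to be missed, and each cue still fires only once.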

THIRD-PARTY INTEGRATION

AND API

Pixotope empowers users to harness the full potential of our system through our comprehensive API.

With Pixotope API, you have the freedom to create custom integrations tailored to your specific needs.

We prioritize your security, which is why all Pixotope API calls are encrypted, ensuring the highest level of data protection.

Comparison VS/AR vs. XR

Request a Pixotope VS/AR demo

Tell us about your production needs and we'll show you the relevant tools.

FAQs

The Pixotope chroma keyer is a powerful, real-time tool designed for professional virtual studio productions.

It’s easy to use, works seamlessly with Unreal Engine, and delivers clean, high-quality results, even in demanding environments.

Whether you're creating a virtual set or mixing real and digital elements, it helps make everything look polished and realistic.

- Up and running in 30 seconds

- Make changes just before going to air or even while on-air

- Perfect for last-minute tweaks to the look of your program

- Full support for presets and instant recalls

- Fine-tuning controls for edge refinement and spill suppression

- Works with any input to the engine

- Responds quickly to lighting and camera changes, and recovers from them quickly

Yes! Pixotope is designed to integrate with your existing infrastructure:

- Works with standard broadcast cameras

- Compatible with traditional pedestals, dollies, jibs, cranes, and Steadicams

- Supports industry-standard video formats and protocols

- No need for specialized support systems

Pixotope can work with most industry-standard tracking solutions.

We also provide our own tracking solutions: Pixotope Fly, Pixotope Vision, and Pixotope Marker.

- Compatible with all major real-time camera tracking systems

- Supports mechanical, optical, marker-based, and TTL tracking

- No need for a dedicated tracking license

- Talent tracking integration for natural interaction with virtual objects

Yes, Pixotope is built with live broadcast production in mind.

It’s trusted by broadcasters worldwide for creating real-time virtual sets and augmented reality graphics during live events.

Key features include:

- Low-latency operation

- Real-time rendering and compositing

- No baking required: you are always working 'live' with Pixotope

- Support for broadcast-standard video formats (including UHD and 4K over SDI and SMPTE ST 2110)

- Integration with newsroom systems and automation workflows

- Single-operator control for multi-camera productions

To run Pixotope Graphics for Virtual Sets and Augmented Reality, you'll need a solid setup, but nothing proprietary or overly specialized. Here's what you'll need:

- A robust graphics workstation or server with a powerful GPU

- Professional video I/O cards (AJA or Blackmagic Design)

- Standard broadcast cameras (no special equipment required)

- A camera tracking system, either from Pixotope or from a supported third party

- Off-the-shelf hardware, with no proprietary boxes required

Check the full system requirements here:

Yes, through our partnership with Erizos and its Erizos Studio platform.

- Support for MOS protocol-based workflows

- Template-based, rundown-driven workflows

- Automation-driven playout capabilities

- Single operator can control VS, AR, XR, and CG graphics

- HTML5-based plugin for the most common newsroom systems on the market